TL;DR

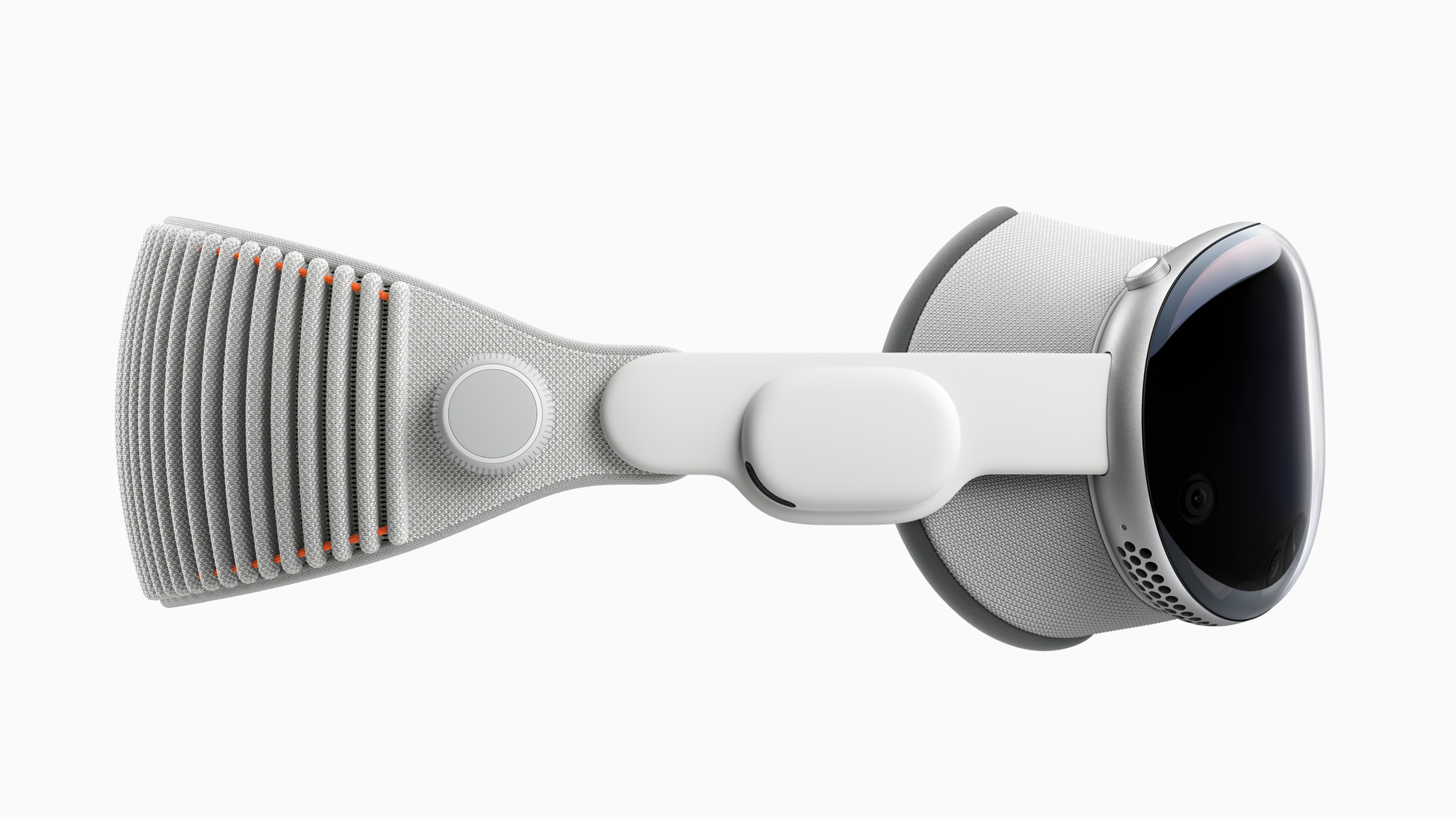

- Apple is preparing to bring its new head-mounted display, Vision Pro, to market, describing it as a spatial computer.

- Spatial computing is being presented as something different from the metaverse, though the distinction is moot. It describes a form of computing in which virtual experiences and content interact with the physical world in new ways through spatial interfaces, changing both human-to-computer and human-to-human interaction.

- New head-mounted and hands-free internet gateways could mean the end of the smartphone era.

Today’s phrase is spatial computing, a term Apple has adopted to describe Vision Pro, its consumer electronics “wearable.” But however much companies such as Apple, Sony and Siemens claim that this initiates a new era, some are wondering whether it is simply the metaverse by another name.

So scarred is the tech industry by the metaverse’s failure to take off (and so synonymous has the name become with Mark Zuckerberg’s Meta) that the 3D internet, the would-be successor to flat, text-heavy web pages, appears to have been essentially rebranded.

Futurist Cathy Hackl offers this subtle distinction: “Meta is on a mission to build the metaverse, and Quest 2 is their gateway. Apple seems to be more interested in building a personal-use device. One that doesn’t necessarily transport you to virtual worlds, but rather, enhances the world we’re in.”

The term spatial computing has been around for years, much like the term metaverse, but it is being given a new lease of life by the second coming of augmented reality (AR), virtual reality (VR) and mixed reality (MR) glasses and goggles, collectively known as XR, or extended reality.

Snap, Sony and Siemens are just some of the companies with new XR wearables due to launch over the next few months. Undoubtedly, all will be a step up in comfort and technical specifications from the early round of such hardware, led by Google Glass, Meta’s Oculus and Magic Leap.

Apple’s Magical Step Into the Metaverse

“The era of spatial computing has arrived,” said Apple CEO Tim Cook, promoting the Apple Vision Pro. In the same sentence, he described it as having a “magical user interface [which] will redefine how we connect, create, and explore.”

Let’s get beyond the smoke and mirrors. There’s no “magic” in the Vision Pro, other than as a brand name Apple uses for its accessories (think Magic Keyboard and Magic Trackpad).

The tech community has, however, been keenly looking to Apple to bring such a product to market. Since Apple defined and popularized consumer tech categories including the tablet and the smartphone, the best bet for XR wearables going mainstream was always going to come from Cupertino.

Encounter Dinosaurs, a new app by Apple that ships with Apple Vision Pro, lets users interact with giant, three-dimensional reptiles as if they were bursting into the user’s own physical space.

One reason Cook and others prefer the term spatial computing is that there is greater confidence this iteration of the technology can blend the actual and digital worlds through seamless user interaction.

As Cathy Hackl puts it, spatial computing is an evolving, 3D-centric form of computing that uses a wide range of technologies to blend our physical world with virtual experiences. It enables humans to interact and communicate with each other and with machines in new ways, and it gives machines new capabilities to navigate and understand our physical environment.

From a business perspective, says Hackl, it will allow people to create new content, products, experiences and services that have purpose in both physical and virtual environments, expanding computing into everything you can see, touch and know.

Interaction is based not on a keyboard but on gaze, voice and gesture. As Apple puts it, the Vision Pro operating system “features a brand-new three-dimensional user interface controlled entirely by a user’s eyes, hands, and voice.”

It’s not “Minority Report” just yet, but you can see where this is headed. Here’s Apple’s description: “The three-dimensional interface frees apps from the boundaries of a display so they can appear side by side at any scale, providing the ultimate workspace and creating an infinite canvas for multitasking and collaborating.”

Its screen uses micro-OLED technology to pack 23 million pixels into two displays. An eye-tracking system combines high-speed cameras with a ring of LEDs that “project invisible light patterns onto the user’s eyes” to enable interaction with the digital world. No mention is made of having to sign away your privacy, though this is a pretty invasive aspect of the technology. Do you want Apple to know exactly what you are looking at? If so, expect hyper-personalized adverts pinged to your Apple ecosystem.

Or as Hackl — a tech utopian — writes: “AR glasses will turn one marketing campaign into localized media in an instant.”

Apple’s Competition

Such features are not exclusive to Apple. A new head-mounted display from Sony, designed in collaboration with Siemens and due later this year, also has 4K OLED microdisplays, plus a “ring controller” that allows users to “intuitively manipulate objects in virtual space.” It also comes with a “pointing controller” that enables “stable and accurate pointing in virtual spaces, with optimized shape and button layouts for efficient and precise operation.”

The device is powered by the latest XR processor from Qualcomm Technologies. Separately, Qualcomm has unveiled an XR reference design based on the same chip that features eye-tracking technology. The idea is that this will provide a template for third-party manufacturers to build their own XR glasses.

The Sony and Apple headsets are aimed at different markets, but both are hardware gateways to the 3D internet, or the metaverse, even if Apple studiously avoids the term and Sony uses it only when talking about industrial applications.

Apple Vision Pro targets consumers, even if early adopters will have to be relatively well-heeled to fork out $3,500 (plus $150 for special optical inserts if your eyesight isn’t 20/20).

This Changes… Some Things

Chief applications include the ability to capture stills or video on the latest iPhone, which users can then play back in Spatial 3D (i.e., with depth) on their Vision Pro. The same video and stills will appear two-dimensionally flat when viewed on any other device.

FaceTiming someone will also be possible as a new 3D-style experience within the Vision Pro goggles. According to Apple, this “takes advantage of the space around the user so that everyone on a call appears life-size.” To take part, users create their own “persona” (a term Apple chose to differentiate itself from Meta’s colonization of the word “avatar”).

In addition, Apple has loaded Vision Pro with TV and film apps from rivals Disney+ and Warner Bros.’ MAX (but not Netflix), to be viewed “on a screen that feels 100 feet wide with support for HDR content.” As a reminder, the screen is millimeters from your face.

Within the Apple TV app, users can access more than 150 3D titles, though details are not provided. These could be experimental 3D showcase titles or stereoscopic conversions, reviving the stereo 3D fad of a decade ago.

More significantly, Apple Immersive Video launched as a new entertainment format “that puts users inside the action with 180-degree, 3D 8K recordings captured with Spatial Audio.” Among the interactive experiences on offer in this format is Encounter Dinosaurs.

No details were given of how this content is created or at what production cost. Sony’s new XR headset, by contrast, is aimed squarely at the creative community.

Indeed, Sony is marketing the device as a Spatial Content Creation System and says it plans to collaborate with developers of a variety of 3D production software, including in the entertainment and industrial design fields. The device links to a mobile motion-capture system with small, lightweight sensors and a dedicated smartphone app that together enable full-body motion tracking.

In Sony speak, it “aims to further empower spatial content creators to transcend boundaries between the physical and virtual realms for more immersive creative experiences.”

Where Is This Headed?

Spatial computing unshackles the user’s hands and feet from a stationary block of hardware and connects their brains (heads first) more intimately with the internet.

Hackl thinks Vision Pro is the beginning of the end for the traditional PC and the phone.

“Eventually, we’ll be living in a post-smartphone world where all of these technologies will converge in different interfaces. Whether it’s glasses or humanoid robots that we engage with, we are going to find new ways to interact with technology. We’re going to break free from those smartphone screens. And a lot of these devices will become spatial computers.”

She thinks 2024 will be an inflection point for spatial computing.

“Eventually you’ll have a spatial computing device that you can’t leave the house without,” she predicts, “because it’s the only way that you can engage with the multiple data layers and the information layers and these virtual layers that will be surrounding the physical world.”

She admits that right now “there’s a bit of chaos” and that Apple Vision Pro may not be the breakthrough everyone expects in its first iteration.

“To me, the announcement of Apple offers a convergence of the idea of seamless interaction, breaking through the glass and a transformation from social media-driven AI to personal, human AI,” she says. “Will all that happen with the release of Apple’s first headset? No, and I wouldn’t expect it to. That’s a lot to put on one company’s shoulders. But Apple is different from other headset makers, which gives us an opportunity to see a different evolution of AR.”