TL;DR

- Production on “Fallout” leveraged virtual production technology to create a compelling adaptation of the popular video game franchise, collaborating with Magnopus to execute the in-camera visual effects (ICVFX) for Season 1.

- The majority of the production crew were new to virtual production, but producer Margot Lulick led efforts to understand how to best leverage the LED volume during the creative process.

- AJ Sciutto, director of virtual production at Magnopus, explains how the LED volume used for “Fallout” served as both a technical tool and a story point.

Creating the world of “Fallout”

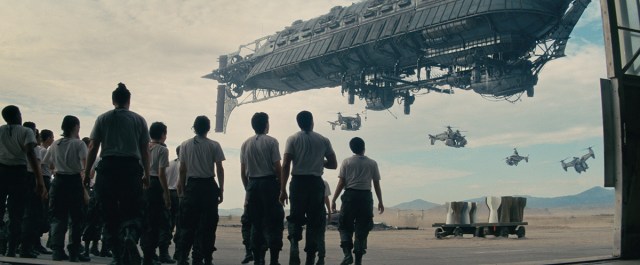

The team behind Prime Video’s Fallout series utilized virtual production technology to create a compelling adaptation of the video game franchise.

Kilter Films’ formula appears to be a winning one, with Amazon MGM Studios announcing season two within days of the streaming debut. “The bar was high for lovers of this iconic video game,” studio head Jennifer Salke noted.

To bring the world of Fallout “from console to camera,” executive producers Jonathan Nolan and Lisa Joy turned to LA creative studio Magnopus to execute the in-camera visual effects for season one.

READ MORE: Magnopus brings Amazon’s Fallout series to life with virtual production powered by Unreal Engine (Unreal Engine)

“Shooting on [35mm] film undoubtedly brought a unique and beautiful aesthetic to the show by softening details, accentuating the artistic grit, and creating separation between the layers of the wasteland,” said AJ Sciutto, director of virtual production at Magnopus, in an interview for the Unreal Engine website.

On the other hand, Sciutto continued, “Producer Margot Lulick made sure that proper attention was given to the LED portions of the shoot. ICVFX was new territory for the majority of the production team, but Margot and her team spent time learning about the process to ensure that we were leveraging the best parts of the volume creatively and shooting efficiently within it.”

Sometimes, VP Just Makes Sense for the Story

Leveraging the visualization process early also let the Magnopus team emphasize that the show’s “imagery should support that narrative conceit” of dystopian fiction, even for dialog scenes that might otherwise have been overlooked.

However, Sciutto pointed out that “the story lent itself to the technology of the LED volume, as in the series, a Telesonic projector simulates a Nebraska cornfield landscape within the Vault; so the LED volume is used both as a technical tool and a story point. This allowed Jonathan Nolan and the showrunners to have some fun with the idea of playing with the time of day mid-shot.”

“For example,” he said, “during the wedding scene in the cornfield, the Overseer has a line that indicates the change from dusk to nighttime, animating a moonrise mid-shot. It also allowed us to play with beautiful FX elements on the wall during the battle between Vault 33 and the surface dwellers.

“When the projectors are hit by gunfire, our Houdini team, Justin Dykhouse and Daniel Naulin, built out a film-burn effect that eventually took over the entire screen.”

To achieve that, he said, “We captured references of film burning, conducted online research on the film types in the 50s and their distinctive burning patterns, and created a captivating effect that gradually spread to all three walls of the vault.”

Integrating VP From the Beginning (Really)

Conversations about the project actually began while the Kilter principals were still in production for HBO’s Westworld, which enabled VP technology to be leveraged throughout Fallout’s pre-production stages, as well.

For Fallout, by Sciutto’s count, the Magnopus team consisted of 19 artists who built virtual sets; seven operators who handled the LED wall, integrated camera tracking, and lighting-system programming; and 11 more people in software engineering, creative direction, and production oversight.

Sciutto explained, “We provided creative input for leveraging virtual production across the entire show and worked with the filmmakers to break down the scripts into scenes and environments that would benefit the most from ICVFX.

“We landed on four environments — the farm and vault door scenes in Vault 33, the cafeteria setting in Vault 4, and the New California Republic’s base inside the Griffith Observatory. Anything happening in a Vertibird was a great candidate for LED process shots.”

Designing and Building Out the Volume

Fallout was both challenged by and benefited from being an early VP adopter: there were not dozens of prebuilt stages and volumes to choose from, but the team was ultimately able to influence a design that was just right for the production’s needs.

They turned to Manhattan Beach Studios to house their horseshoe-shaped volume, which measured 75 feet wide, 21 feet high, and nearly 100 feet long.

“Our intention was to design a stage that gave the filmmakers the lighting benefits of a curved volume, in addition to the flexibility of longer walk and talks offered by straight sections of a flat wall,” Sciutto explained. He added that they created “a modular and flexible LED stage.”

Because virtual production is data- and power-intensive, they also contracted Fuse Technical Group to design and build a custom server room and set up the video distribution on set. The server room was ultimately built in a shipping container and sent to the stage in Bethpage, on Long Island, New York. It housed six operator workstations and 18 5K outputs: 12 for the main LED wall and six for the wild walls.

Thorough (and Effective) Visualization

The visualization team (helmed by Kalan Ray and Katherine “Kat” Harris) created a 3D replica of all the physical and virtual Fallout sets, enabling the team to craft “the whole scene and compose meaningful shots that informed every department on the show.”

“We worked with Howard Cummings and Art Director Laura Ballinger to determine which pieces of the set should be built physically and which should be built virtually,” Sciutto said.

“These decisions had an enormous impact on lighting and VFX, so we used concept art and storyboards to coordinate on unifying the two worlds and figure out exactly where the physical/virtual transitions would be.”

Volume art director Ann Bartek “provided the exact materials, colors, and textures of the physical set builds so the virtual set builds could match,” according to Sciutto.

Next, they “built a full-scale model of the actual warehouse in Bethpage, Long Island, and fit the true dimensions of the LED wall into it. This helped us plan not just the creative aspects of the show,” Sciutto said, “but also helped us identify where to place pick points for the stunt team, where to install overhead lighting, and even where the Unreal operator desks should be.”

VP lead Harris “set up the initial blocking and integrated multi-user systems to allow the filmmakers to dive in alongside our animators and start moving pieces around in real time,” he said.

Sciutto noted they were able to leverage Unreal’s virtual scouting tools so that the filmmakers could “place cameras and characters in precise locations and even lens the shot in real time. We could then save that information and use it to create a heatmap of the environment.”
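The article does not describe how that heatmap was built. As a rough illustration only, saved camera placements could be binned into floor cells and counted. The function name, the grid size, and the sample coordinates below are all hypothetical and are not taken from the production.

```python
from collections import Counter


def build_heatmap(camera_positions, cell_size=1.0):
    """Count saved camera placements per square cell of the stage floor.

    camera_positions: iterable of (x, y) floor coordinates.
    cell_size: edge length of one grid cell, in the same units.
    """
    counts = Counter()
    for x, y in camera_positions:
        # Floor division maps each position to the cell that contains it.
        cell = (int(x // cell_size), int(y // cell_size))
        counts[cell] += 1
    return counts


# Hypothetical scouting data: (x, y) positions in feet, invented for this sketch.
saved_cameras = [
    (0.5, 0.5), (0.7, 0.2),
    (3.2, 1.1), (3.9, 1.8), (3.1, 1.5),
    (9.0, 4.0),
]

heatmap = build_heatmap(saved_cameras, cell_size=1.0)
hottest_cell, hits = heatmap.most_common(1)[0]
print(f"Most-used cell: {hottest_cell} with {hits} camera placements")
```

Cells with high counts would mark the parts of the environment that cameras actually look from, which is one plausible way to decide where detail matters most.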

Additionally, they “could carve the scene up in modular ways that allowed us to hide computationally expensive set pieces when they weren’t on camera.”
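One simple way to picture this kind of modular hiding is a field-of-view test: an expensive set piece is hidden when it falls outside the camera's horizontal view. The sketch below is a simplified 2D assumption and does not represent the Magnopus setup or any Unreal Engine API. The piece names, costs, and threshold are invented.

```python
import math
from dataclasses import dataclass


@dataclass
class SetPiece:
    name: str
    x: float
    y: float
    cost: float  # hypothetical relative render cost


def in_view(cam_x, cam_y, cam_yaw_deg, fov_deg, piece):
    """Return True if the piece lies within the camera's horizontal field of view."""
    angle_to_piece = math.degrees(math.atan2(piece.y - cam_y, piece.x - cam_x))
    # Wrap the angular difference into the range [-180, 180).
    delta = (angle_to_piece - cam_yaw_deg + 180.0) % 360.0 - 180.0
    return abs(delta) <= fov_deg / 2.0


def pieces_to_hide(cam_x, cam_y, cam_yaw_deg, fov_deg, pieces, cost_threshold=5.0):
    """List the expensive pieces that are currently off camera."""
    return [
        p.name
        for p in pieces
        if p.cost >= cost_threshold
        and not in_view(cam_x, cam_y, cam_yaw_deg, fov_deg, p)
    ]


# Hypothetical scene: camera at the origin facing +x with a 90-degree view.
pieces = [
    SetPiece("vault_door", 10.0, 0.0, cost=8.0),   # expensive, in front of camera
    SetPiece("cornfield", -10.0, 0.0, cost=9.0),   # expensive, behind camera
    SetPiece("crate", 0.0, 10.0, cost=1.0),        # cheap, off to the side
]
hidden = pieces_to_hide(0.0, 0.0, 0.0, 90.0, pieces)
print(hidden)
```

A real volume would also need to account for off-camera pieces that still contribute light or reflections to the set, which this sketch ignores.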

Their efforts also meant that they inadvertently “discovered that the dimensions of the physical doors were too small to fit some pieces of the Vertibird through,” Sciutto recalled.

Ultimately, he said, “Maximizing the effectiveness of the LED volume meant visualizing scenes you wouldn’t typically see in a previs pass – like dialog scenes – because it helped ensure we brought the fantastical elements of the story into frame.”

Cine Solves and the Volume DP’s Role

While many things were made easier by the virtual production workflows, not every technology choice simplified processes for Fallout.

(The decision to shoot on 35mm, for example.)

“The lack of a digital full-resolution monitor on the camera meant that we had to be creative and very precise about dialing the look of the images on the LED wall,” Sciutto said.

“Our solution was to apply the film LUT of the show to the output of a Sony Venice camera, using it as a reference to adjust lighting and match color with a combination of OpenColorIO and color grading tools inside of Unreal Engine.

“We measured the color temperature of the scenes on the LED Volume and the foreground set lighting with a color spectrometer for each lighting change to ensure a match.”
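The article does not say how the two readings were compared. One common convention in lighting is to compare color temperatures in mireds (one million divided by the temperature in kelvin), because equal mired differences look roughly equally large to the eye. The following is a minimal sketch under that assumption. The tolerance value and the sample readings are placeholders, not production figures.

```python
def mired(kelvin):
    """Convert a color temperature in kelvin to mireds (micro reciprocal degrees)."""
    return 1_000_000 / kelvin


def mired_shift(wall_kelvin, set_kelvin):
    """Signed mired difference from the LED wall reading to the set-light reading."""
    return mired(set_kelvin) - mired(wall_kelvin)


def is_matched(wall_kelvin, set_kelvin, tolerance_mired=5.0):
    """True if the two readings differ by no more than the assumed tolerance."""
    return abs(mired_shift(wall_kelvin, set_kelvin)) <= tolerance_mired


# Hypothetical readings for two lighting changes.
print(is_matched(5600, 5500))  # small difference, within the assumed tolerance
print(is_matched(5600, 3200))  # daylight wall against tungsten set lighting
print(round(mired_shift(5600, 3200), 1))
```

A positive shift here means the set lighting is warmer than the wall, so either the wall or the fixtures would need adjusting before the next take.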

They also tapped virtual gaffer Devon Mathis to coordinate with both episodic directors of photography and the volume DP Bruce McCleery “to adjust the nit values, white balance, and color values of the LEDs to balance with the rest of the scene on a shot by shot basis.”

Sciutto said that after Fallout, their team has started to “recommend a dedicated volume DP for every large ICVFX production moving forward, particularly episodic work.”

They also tested as much in advance “as the schedule permitted,” Sciutto said. On pre-light days, “we dialed the output of the LED wall with set lighting and shot the result using the camera body and film stock of the show. We’d bracket these tests with the camera’s exposure, as well as with Unreal’s manual exposure tools.”

That information enabled them “to measure at what point our digital reference camera fell out of sync with our film stock, and what adjustments we could make to keep everything looking great in a variety of lighting scenarios. For work like this, using Lumen in Unreal allowed us to make the virtual lighting just as available as the physical lighting was on set.”

He added, “Lumen is the most critical piece of Unreal that made Fallout come together. We experienced a significant drop in on-set downtime after moving away from baked lighting and could iterate on lighting changes in real time with the DP.”

In fact, Sciutto noted, “During the entire LED wall production phase, we had a 99% uptime, only suffering one nine-minute delay. This gave the showrunners and ADs confidence to schedule the volume shots aggressively.”
