READ MORE: The Broadcast and Live Events Field Guide (Epic Games)

Traditional content creation, live event, live streaming, and live broadcast workflows are incorporating, and in some cases being reconfigured by, game engine technology. In particular, the real-time processing of music, animation and other assets for which game engines were built has reached a point where the technology can facilitate real-time experiences in many film, TV and live event content pipelines.

It’s an unfamiliar concept for many in the industry, and a new guide from Epic Games reviews many of the key elements.

As Epic explains, some teams are doing truly live, real-time mixed reality work that harnesses the engine to serve interactive elements, much as it does in video games. Others commonly use it to produce the types of elaborate and highly detailed pre-rendered assets once served by traditional motion graphics pipelines. And in the events space, production companies that deliver pre-rendered assets can use a game engine to create and deliver those assets in a live environment.

The Broadcast and Live Events Field Guide is designed to inspire producers to explore real-time mixed reality content (and to use Unreal Engine). It also includes advice related to training, the relevance of existing skill sets, and other considerations that impact the planning, production and delivery of live real-time experiences.

Here are the salient points NAB Amplify has selected from the guide.

What Is a Game Engine?

A game engine is a software framework for both creating and delivering games — it encompasses not only the tools for game developers to construct a world and program gameplay, but also a means to compile these games into a format that can be served to consumer devices for gameplay.

Game development is a deeply collaborative process, where animators, coders, writers, artists, audio specialists, and many more come together to build interactive worlds. A game engine serves as a central hub that brings their contributions together.

As interactive entities, video games are defined by their real-time nature. They have to respond instantly to a constant stream of user inputs, providing highly polished experiences that dynamically adapt while remaining a cohesive whole.

Real-time content creation means generating visual media on demand from some combination of image assets, code, and external data.

Real-Time Technology in Broadcast and Live Events

As game engines advanced, it became clear that they were perfect for the emerging field of real-time graphics in broadcast and live events. For example, when The Famous Group unleashed a giant digital panther on the Carolina Panthers NFL team’s stadium, the company created a real-time experience that was thoughtfully mixed with reality. While that highly collaborative process involved disciplines like camera operation and live streaming to stadiums, it remains fundamentally comparable to making a video game, which is why game engines are applicable to such projects.

READ MORE: Here’s the tech behind the Carolina Panthers’ giant AR cat (The Verge)

And so we find ourselves at a point where broadcasts and events can inherit much of the real-time potential of games, mixing realities across a wide range of live experiences.

That’s not to say that the solution is simply to pick up a game engine and work like a game developer. Rather, industry shifts and technological evolution have, over time, made game engines the most suitable option for many broadcast and live event productions.

Merger of Linear and Nonlinear

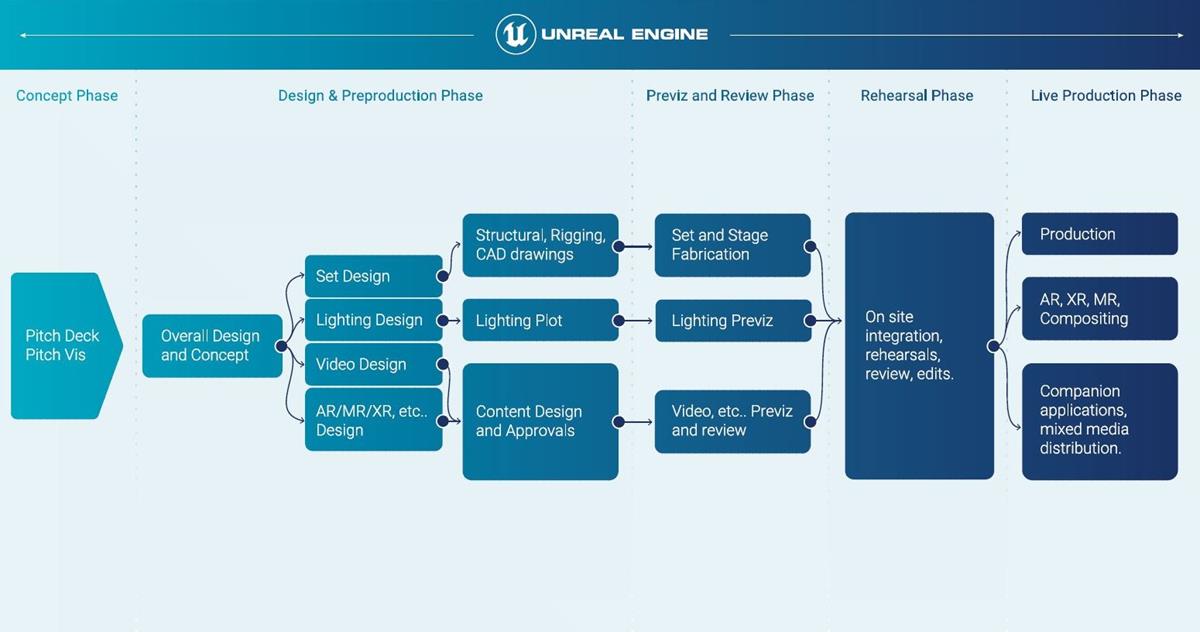

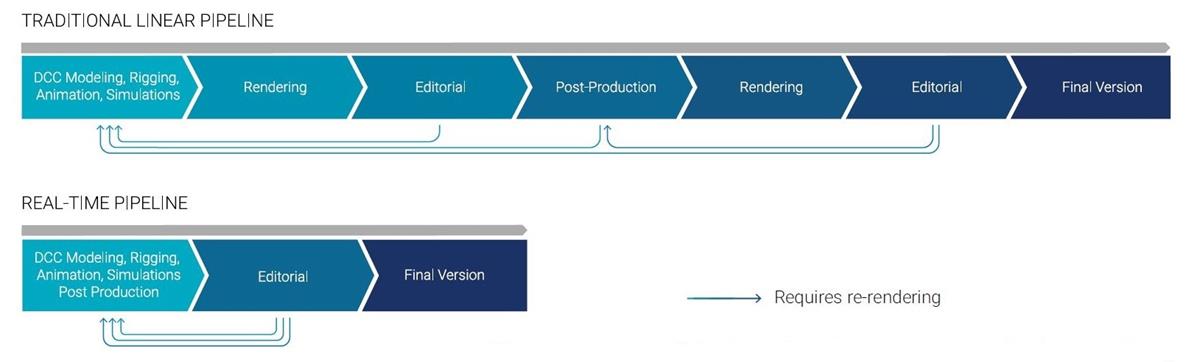

A traditional linear pipeline focuses on pre-rendered assets, so it better serves projects, or parts of projects, that demand precise control over final asset details and do not require rapid iteration or interactivity.

Real-time, meanwhile, offers immediate results and iterations, reducing the time to render to almost zero, while allowing for interactivity of content.

As such, real-time pipelines introduce a significant shift in design approach and content creation possibilities: content can be rapidly and reliably adjusted right up until delivery, so assets can, for example, be reworked to fit atypical or changing display hardware.

Because a game engine can manage both true real-time and pre-rendered assets, it offers a highly suitable solution for productions that span both.

Mixing Realities

Live productions can now mix realities, whether AR or MR, broadcast or streamed, and some MR experiences also include an on-site audience.

The common theme across all these types of projects, says disguise Solutions manager Peter Kirkup, is that they come down to complex pixel manipulation.

“Often, we’re working on projects where pixels take a weird configuration on a stage, something very different from a standard 16 x 9 monitor,” he says. “These non-standard setups are where we really add value. And we can’t really add that value with a rigid or ‘standard’ pipeline.”

disguise Solutions serves clients that might need to send pixels to a vast moving stage space, as seen on the likes of the Eurovision Song Contest or the BRIT Awards, where highly unusual aspect ratios may change on the fly. It’s a striking example of how much variety modern real-time pipelines need to adapt to.

Epic emphasizes that there is no one-size-fits-all approach when it comes to building a real-time pipeline. Rather, pipelines for broadcast and live events need to be flexible.

“For us, a pipeline is just a combination of layers in the timeline, layers that we composite and blend,” says Erik Beaumont, head of Mixed Reality at The Famous Group.

Kirkup agrees: “We think of Unreal Engine as a layer on that timeline, a layer that we can comb for assets and even textures, and bring them into the worlds we support.”

Building out assets might include mapping content onto surfaces or cutting pixels out from a render that’s happening in Unreal Engine in real time and putting those pixels on a different part of the stage. The team might also bring in other layer types as textures, such as pre-rendered imagery or web-based content from an HTML5 source.
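To make the idea of a pipeline as “layers that we composite and blend” more concrete, here is a toy compositor. It is a minimal sketch under our own assumptions, not how disguise, The Famous Group, or Unreal Engine actually implement anything: each layer is a tiny RGBA image, layers are blended bottom to top with the standard Porter-Duff “over” operator, and a hypothetical `remap_region` helper cuts a rectangle out of the composited frame and places it elsewhere on a non-16:9 canvas. The names `Layer`, `composite` and `remap_region` are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Straight (non-premultiplied) RGBA, each channel in [0, 1].
Pixel = Tuple[float, float, float, float]
Image = List[List[Pixel]]  # indexed as image[y][x]

TRANSPARENT: Pixel = (0.0, 0.0, 0.0, 0.0)


def blank(width: int, height: int) -> Image:
    """Fully transparent canvas, e.g. an oddly shaped stage output."""
    return [[TRANSPARENT for _ in range(width)] for _ in range(height)]


def over(top: Pixel, bottom: Pixel) -> Pixel:
    """Porter-Duff 'over' operator for straight-alpha pixels."""
    ta, ba = top[3], bottom[3]
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return TRANSPARENT
    r, g, b = ((top[i] * ta + bottom[i] * ba * (1.0 - ta)) / out_a for i in range(3))
    return (r, g, b, out_a)


@dataclass
class Layer:
    name: str  # hypothetical labels, e.g. "engine_render", "prerendered_loop"
    image: Image
    opacity: float = 1.0


def composite(layers: List[Layer], width: int, height: int) -> Image:
    """Blend layers bottom to top; list order stands in for timeline stacking order."""
    out = blank(width, height)
    for layer in layers:
        for y in range(height):
            for x in range(width):
                r, g, b, a = layer.image[y][x]
                out[y][x] = over((r, g, b, a * layer.opacity), out[y][x])
    return out


def remap_region(src: Image, box: Tuple[int, int, int, int],
                 dst: Image, origin: Tuple[int, int]) -> Image:
    """Copy box=(x, y, w, h) from src into dst at origin=(x, y); dst is modified in place."""
    sx, sy, w, h = box
    dx, dy = origin
    for j in range(h):
        for i in range(w):
            dst[dy + j][dx + i] = src[sy + j][sx + i]
    return dst


if __name__ == "__main__":
    blue, red = (0.0, 0.0, 1.0, 1.0), (1.0, 0.0, 0.0, 1.0)
    background = Layer("prerendered_loop", [[blue] * 4 for _ in range(2)])
    render = Layer("engine_render", [[red] * 4 for _ in range(2)], opacity=0.5)
    frame = composite([background, render], width=4, height=2)
    print(frame[0][0])  # (0.5, 0.0, 0.5, 1.0)

    # Send the left half of the top row to the far end of a wide 8x1 "LED strip".
    strip = remap_region(frame, (0, 0, 2, 1), blank(8, 1), (6, 0))
    print(strip[0][6], strip[0][0])
```

Production media servers work with GPU textures, warps and masks rather than rectangular copies of Python tuples; the rectangle here only stands in for the pixel-mapping step the quote describes.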

The difference, says Beaumont, comes in when you consider the aesthetics and visual quality of the output. “You need to combine these two worlds where all your design and creative is living in the broadcast space,” he says, “but your technology is all living in a space similar to game development.”

Virtual Production

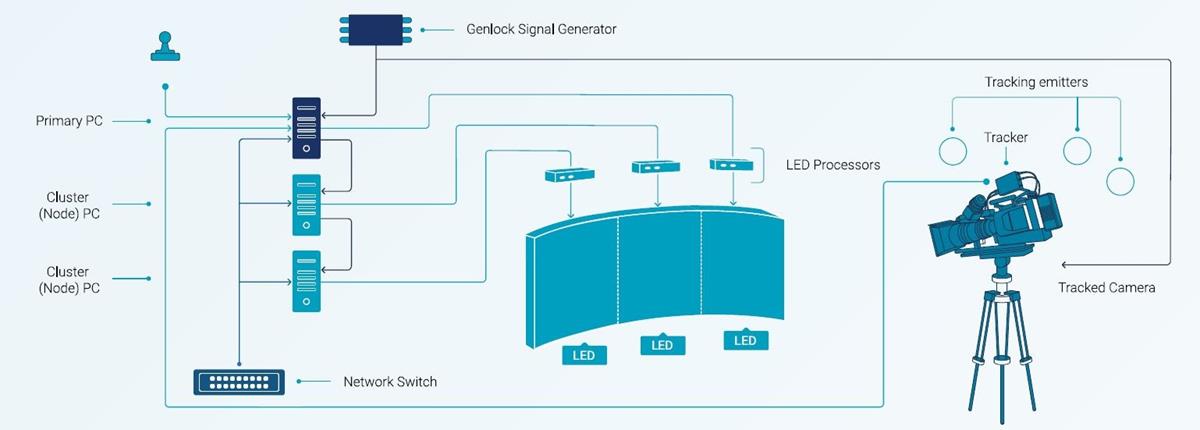

In virtual production, there is a move toward “in-camera visual effects” (ICVFX) models. In those cases, a shoot might be based around large LED volumes that display realistic output from real-time engines behind performers. Images on the screens move in sync with the tracked real-world camera as it follows the actors.

This approach can produce final-pixel imagery entirely in camera, the current state-of-the-art method for virtual production, and it helps cast and crew on set visualize the digital world around them rather than guess in a green-screen volume. Even so, much work would still be done in post; the physical complexity of ICVFX favors productions that get generous attention in post over straightforward linear live broadcasts.

Further into the future, though, Epic foresees ICVFX playing some role in live productions.

Real-time motion capture (mocap) is also becoming more practical, which presents an option for live productions: a performer’s movements could be captured in real time and used to guide the movements of an animated character during an event.

Meanwhile, LED walls and motion capture are sometimes used in live and broadcast real-time applications as a visualization tool in the early stages of a workflow. Technological advances will likely make LEDs and mocap more commonplace in live contexts.

“However, the fact that live broadcasts need to be technically robust and truly final pixel in the moment of the shoot or performance means a more significant reliance on more proven approaches such as green screen.”

New Roles, New Skills

Almost every interview conducted for the guide raised the point that traditional broadcast and event roles and skills remain highly relevant, and that retraining is only about augmenting and tuning existing staff’s expertise.

Building a knowledge base about game engines and new technologies is important, but at the same time, the fundamentals of understanding audience, storytelling, and engagement in existing TV, broadcast, film, video, and event sectors can be broadly applied to real-time.

Epic says that adopting even just a few game development practices will give a significant boost to any real-time project. It recommends that broadcast and event teams experiment by building their own game as an internal project.

This is, in the guide’s words, “a powerful way to enable your entire team to explore the fundamentals of real-time process, practice, and technology,” over and above hiring in specialists.

Real-time specialists are needed, as is basic familiarity with the core concepts of MR technology, but it’s equally important to bring storytelling skills to the foreground.

Many speak to the value of securing a “system integrator” or similar team that can handle the technical side of connecting content with infrastructure, while also serving as a go-between who smooths the interactions between hardware people, performers, and content providers.

Elsewhere, many virtual MR productions are happening with a “screen producer” sitting high in the food chain, an individual effectively charged with taking responsibility for what appears on screen, and thus coordinating the related technology and teams.

Other professionals consulted for the field guide put forward the idea of a generalist “Unreal Engine specialist” or even “Unreal Engine wizard,” meaning somebody who can understand the game engine and its interaction and integration with the wider ecosystem both in rehearsals and on the go-live day.

“None of those roles are established or recognized as including a specific skill set or authority within production hierarchies. The best way forward may simply be to give more time than you might imagine to establish a team hierarchy while pursuing specialist hires.”

Challenging Misconceptions

Real-time does not necessarily mean saving money or time. It is a complex process, which is where expert knowledge comes in.

“Many productions thrive when served by a floating liaison who is adept with real-time technology and can move between creative, hardware, artist, and venue teams. Elsewhere, screen producers with comparable skills have a senior position and take responsibility for everything appearing on screen, an alternative framing of a go-between for various on-site teams.”

“Ultimately, and at this stage with real-time technology, hiring experts to complement your team is likely more sensible than restructuring or reinventing your existing team,” recommends Epic.

Sometimes real-time broadcast and live events productions can go from initial idea to completion in staggeringly short time frames. Other real-time projects may take months of planning and implementation.

For example, a real-time-embellished music tour might require constant updates and maintenance for years. The most ambitious projects can require long periods of planning simply to get started.

Equally, while some real-time productions cost relatively little, others might demand vast custom stages, or towering bespoke LED screens or projection systems.

The solution here, as much as there is one, is to free yourself from assumptions that real-time always means time and money savings. A more accurate statement is that going with real-time technology offers more options for engaging your target public and can lead to stronger relationships with audiences that benefit you in other ways — sold-out shows, great reviews, returning customers, or word-of-mouth marketing.

“Although a fundamental understanding of real-time media servers’ function, role, and essential workings is helpful, for now the reality of such productions likely means partnering with an individual provider who will deliver both physical infrastructure and expertise — and even on-site staff in many cases.”

Epic suggests that clients, artists, or brands might often push for real-time because they have seen rivals succeed with it. But adopting real-time methodologies and approaches simply for their own sake, practicing “theater of real-time,” is never advisable, and could lead to less impact, more time and budgetary sink, or even failure to deliver on a brief.

“Don’t pick a real-time approach just to tick a box or impress your clients with your toolset,” says the company. “It’s not an all-or-nothing approach — you get to pick exactly where, or in what capacity, it will fit your project.”

Whether you complete your project entirely in traditional pre-rendered workflows or leverage real-time platforms only when a project calls for high levels of interactivity or reactivity, the choice is entirely up to you. “Essentially, pick real-time in the desired capacity because it is adding value to the experience, to the workflow, or to your time-cost analysis considerations.”

If you have concerns about the knowledge required and learning curve, Epic suggests you experiment in-house to test the waters.

Some even advocate making a simple video game to understand the fundamental concepts; after all, code-free game development platforms now exist that are aimed at children under the age of 10. “Such a project may be more welcoming and achievable than you assume,” Epic says.

Consider the gains game engines and real-time workflows can bring, and endeavor to explore their potential through practice, because once you or your team get the hang of it, it’s very hard to go back!