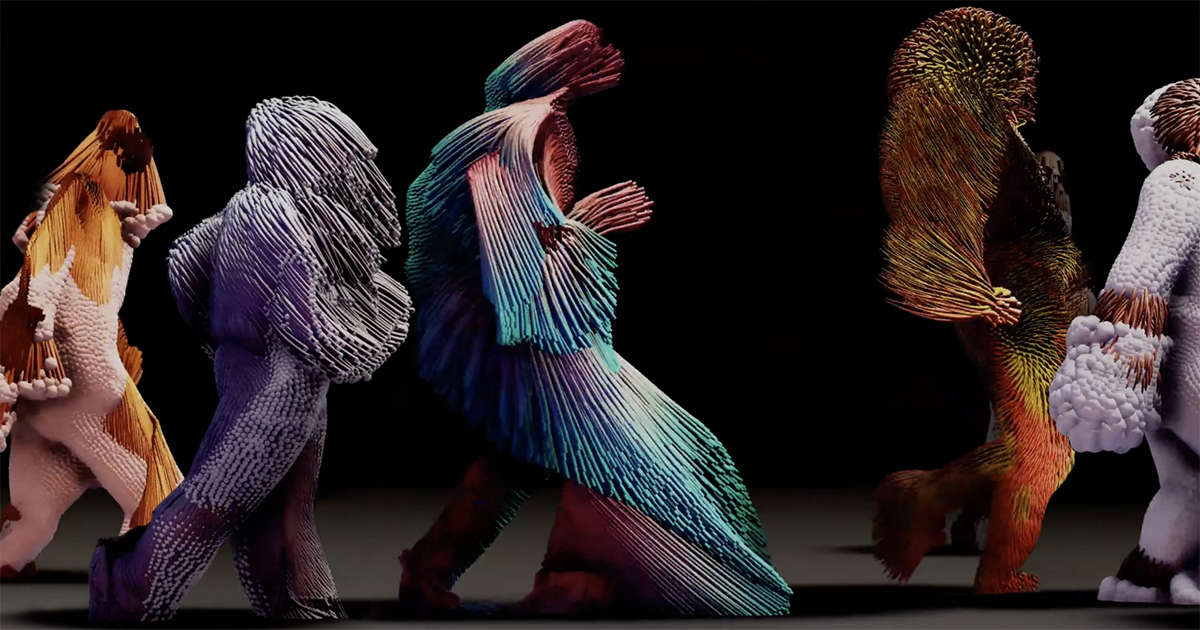

Director Neill Blomkamp has embraced volumetric capture for the “simulation” or dream scenes in his new horror feature Demonic, arguably the most significant use of the technique yet.

Volumetric capture (sometimes also referred to as lightfield or computational cinematography) involves recording objects or people using arrays of dozens of cameras. The data is then processed to render a three-dimensional depth map of the scene, whose parameters, including camera focal length, can be adjusted in post.

The director of District 9 has a background in visual effects and says he was fascinated by the challenge of using the technique to create an entire narrative feature.

It’s an experiment with a number of important lessons, the main one being that the technology needs to advance before it becomes as mainstream as virtual production.

Tech Details

For the project, Volumetric Capture Systems (VCS) in Vancouver built a 4m x 4m cylindrical rig comprising 265 4K cameras on a scaffold. That was supplemented by “mobile hemispheres” with up to 50 cameras that would be brought in closer for facial capture.

Viktor Muller from UPP was Demonic’s visual effects supervisor (and an executive producer on the film). The filmmakers also employed the Unity game engine and specifically its Project Inplay, which allows for volumetric point cloud data to be brought into the engine and rendered in real time.

The data management and logistics “was an absolute goddamn nightmare,” Blomkamp shares in an interview with befores & afters. The team was downloading 12 to 15 terabytes daily. Just to process that volume and keep the show on schedule, Blomkamp had to add another 24 computers to those already at VCS.
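To put those daily volumes in perspective, here is a rough back-of-envelope sketch. It assumes decimal terabytes, data spread evenly across the 265 cameras of the main rig (ignoring the mobile hemispheres), and a full 24-hour window to process each day's capture. None of these assumptions come from the production itself.

```python
# Back-of-envelope throughput for the daily data volumes quoted above.
# Assumptions (not from the source): decimal terabytes (1 TB = 10**12 bytes),
# data spread evenly across the 265 rig cameras, and a 24-hour processing window.

TB = 10**12
CAMERAS = 265
SECONDS_PER_DAY = 24 * 60 * 60


def per_camera_gb(daily_tb: float, cameras: int = CAMERAS) -> float:
    """Average gigabytes per camera per day."""
    return daily_tb * TB / cameras / 10**9


def sustained_mb_per_s(daily_tb: float, window_s: int = SECONDS_PER_DAY) -> float:
    """Average throughput (MB/s) needed to process one day's data within window_s."""
    return daily_tb * TB / window_s / 10**6


for daily_tb in (12, 15):
    print(f"{daily_tb} TB/day: {per_camera_gb(daily_tb):.1f} GB per camera, "
          f"{sustained_mb_per_s(daily_tb):.1f} MB/s sustained")
```

Under these assumptions, 12 TB a day works out to roughly 45 GB per camera and about 139 MB/s of sustained processing, around the clock, just to avoid falling behind.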

Acting inside such a confined space (ringed by cameras, remember) was also hard on the actors, Carly Pope and Nathalie Boltt.

“If they were semi-underwater, maybe that would be the only thing that would make it worse,” Blomkamp acknowledges. “So, hats off to the actors for doing awesome work in that insane environment.”

Nor could the director view the performances on a monitor in real time. Since it took a day to process the data from the cameras, there was nothing to see on set.

“That means you don’t get any feedback from the volume capture rig. You’re just sitting around like a stage play, basically.”

READ MORE: Neill Blomkamp on what volumetric capture brought to his new horror film, ‘Demonic’ (befores & afters)

However, the trickiest problem to surmount was the inability to capture data at a resolution high enough for filming a narrative drama. If the cameras were brought to within a few centimeters of an actor, then high resolution is certainly possible (“You may even see individual strands of hair”), but trying to use the more conventional framing of close-up, medium and wide meant “an exponential drop-off in resolution.”
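The drop-off can be illustrated with a simple pinhole-camera sketch. The 3840-pixel sensor width and the 60-degree horizontal field of view below are illustrative assumptions, not the specifications of the cameras used on Demonic.

```python
import math

# Pinhole-camera sketch of how pixel density on the subject falls with distance.
# Assumptions (not from the source): a 3840-pixel-wide readout for "4K"
# and a hypothetical 60-degree horizontal field of view.

SENSOR_WIDTH_PX = 3840
HFOV_DEG = 60.0


def pixels_per_cm(distance_cm: float,
                  width_px: int = SENSOR_WIDTH_PX,
                  hfov_deg: float = HFOV_DEG) -> float:
    """Horizontal pixels covering one centimeter of a surface facing the camera."""
    scene_width_cm = 2.0 * distance_cm * math.tan(math.radians(hfov_deg) / 2.0)
    return width_px / scene_width_cm


for d in (10, 50, 200):
    linear = pixels_per_cm(d)
    # Squaring the linear density gives pixels per square centimeter of surface.
    print(f"{d:>4} cm: {linear:7.1f} px/cm, {linear ** 2:10.1f} px/cm^2")
```

In this idealized model, linear pixel density falls in proportion to distance and pixels per unit of surface area fall with the square of distance. Strictly speaking that is an inverse-square rather than an exponential decline, but it is steep enough to explain why pulling back to a medium or wide framing costs so much detail.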

In tests, what resulted was a glitchy lo-fi look, which Blomkamp turned to his advantage by making it core to the story. In Demonic, the vol-cap scenes are presented as a “nascent, prototype, early development, VR technology for people who are in comas, or quadriplegic situations. I think in the context of the movie, it works.”

The captured material included RGB data presented in a series of files or geometry meshes.

“The idea of taking that and dropping it into Unity, and then having it live in a 3D real-time package where we could just watch the vol-cap play, and we could find our camera angles and our lighting and everything else — I mean, I love that stuff. That’s exactly what I wanted to be doing, and the narrative of the movie allowed for it.”

Blomkamp describes the post-process of direction as “like old-school animation, where you’re just hiding and un-hiding different objects over a 24-frame cycle per second. And then you just keep doing that.”
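The hide-and-un-hide approach Blomkamp describes can be sketched in a few lines. This is a minimal, engine-agnostic illustration, not Unity's API or the production's pipeline: `MeshFrame`, `show_frame_at` and the mesh names are hypothetical, and the `visible` flag stands in for whatever show/hide mechanism a real engine provides.

```python
from dataclasses import dataclass

FPS = 24  # playback rate mentioned in the quote


@dataclass
class MeshFrame:
    """One captured mesh; `visible` stands in for an engine's show/hide flag."""
    name: str
    visible: bool = False


def show_frame_at(meshes: list[MeshFrame], time_s: float, fps: int = FPS) -> MeshFrame:
    """Un-hide the mesh for the current frame and hide all the others."""
    index = int(time_s * fps) % len(meshes)  # loop when the clip runs out
    for i, mesh in enumerate(meshes):
        mesh.visible = (i == index)
    return meshes[index]


# One second of hypothetical capture: 24 meshes, one per frame.
clip = [MeshFrame(f"mesh_{i:04d}") for i in range(FPS)]
current = show_frame_at(clip, time_s=0.5)
print(current.name, sum(m.visible for m in clip))  # prints "mesh_0012 1"
```

Exactly one mesh is visible at any moment, so playback amounts to stepping through the sequence 24 times per second, much like flipping through animation cels.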

If it sounds tortuous, Blomkamp wouldn’t disagree, but he feels that, given advances in computer processing power, the technique will get faster and the data easier to sculpt.

“The whole point of real-time cinema and virtual cinema is to be able to be freed from the constraints of day-to-day production… that you can be in a quiet, controlled post-production facility and load up your three-dimensional stuff in something like Unity, grab a virtual camera, and take your time, over weeks, if you want, to dial it in exactly the way that you want. So, in that sense, I don’t really think it matters that there’s a delay between gathering your vol-cap data and then crunching it down to 3D, so you can drop into your real-time environment.

“I think what does matter, though, and what is a huge issue, is how you capture it, in this highly restrictive, absolutely insane way that it currently has to be done. That’s what will change.”