BY ROBIN RASKIN

TL;DR

- Now that the initial knee-jerk reactions to having Generative AI as our companions have quieted down a bit, it’s time to get to work and master the skills so that Generative AI is working for us, not the reverse.

- The Kevin Roose shockwave goaded every tech columnist into writing something about how they broke AI, through a combination of provocation and beta testing the hell out of publicly released platforms.

- Educational institutions are trying to figure out whether to ban Generative AI or teach it to their students. We’re rolling up our collective sleeves for the human/machine beta test.

Now that the initial knee-jerk reactions to having Generative AI as our companions have quieted down a bit, it’s time to get to work and master the skills so that Generative AI is working for us, not the reverse. The Kevin Roose shockwave goaded every tech columnist into writing something about how they broke AI, through a combination of provocation and beta testing the hell out of publicly released platforms like Bing AI, Google’s Bard, and the wildly popular ChatGPT.

In the early days of ChatGPT’s general release, CNET had some faux pas, including plagiarism and misinformation seeping into its AI-generated journalism. This week Wired magazine very carefully spelled out its internal rules for how it will incorporate generative AI in its journalism. (No for images, yes for research, no for copyediting, maybe for idea generation.) Educational institutions are trying to figure out whether to ban generative AI or teach it to their students. We’re rolling up our collective sleeves for the human/machine beta test.

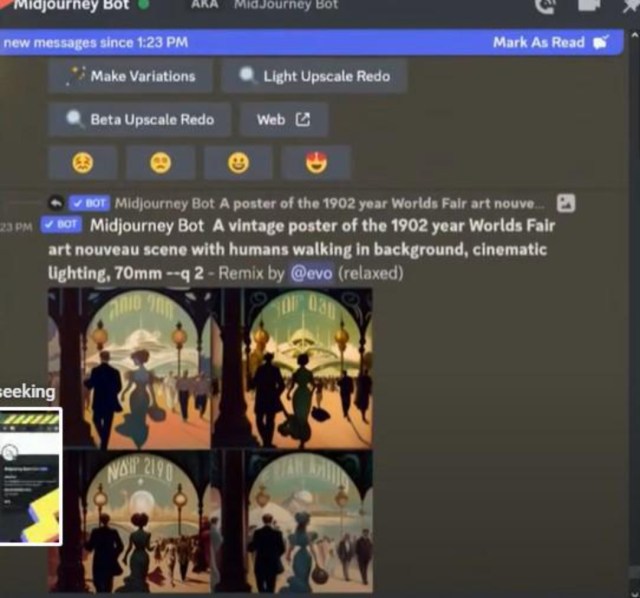

Meanwhile, folks like Evo Heyning, creator/founder at Realitycraft.Live and author of a lovely interactive book called PromptCraft, have been doubling down to dissect, coach, and cheer us into the world of using Generative AI effectively. The book, co-written with a slew of AI companions like Midjourney, Stable Diffusion, ChatGPT, and more, looks at the art, the science, and the many iterations that will help get the most out of creative human/machine communications. You can watch some of her fast-paced PromptCraft lessons on YouTube. They’re kind of the generative AI equivalent of Bob Ross’s art episodes on PBS.

A Magic Mirror for Collective Intelligence

Heyning has worked in AI, as a coder, storyteller, and world-builder since the early days of experimentation. She’s also been a monk, chaplain, and just about everything else that defines a renaissance woman who thinks deeply about AI. “Are the models merely stochastic parrots that spit back our own model? Or are they giving us something that’s a deeper level of comprehension?” she asks.

“AI,” she continues, “is like querying our collective intelligence. Right now most of our chat tools are mirrors of everything that they’ve experienced. They’re closer to asking a magic mirror about collective intelligence than they are about any sort of unique intelligence.”

Our job is to learn the language of the query to coax the best out of the machine. “AI Whisperers,” those who can create, frame, and refine prompts, are out of the gate with a valued skillset.

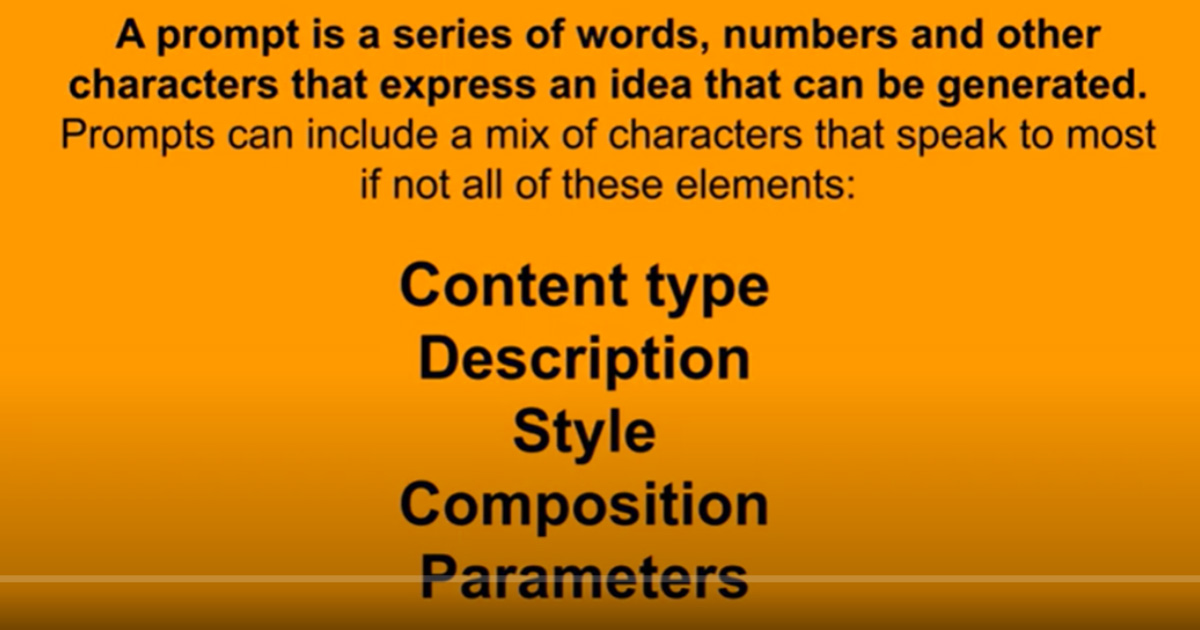

While prompts for generating text, images, movies, and music will vary, there are certain commonalities. “A prompt,” says Heyning, “starts by breaking down the big vision of what you’d like to see created, encapsulating it into as few words as possible.” She likens a lot of the process to a cinematographer calling the shots. “You’re thinking about what the focal point of your creation will be. The world of the prompt is about our relationships with AI, and it includes shifts in language that come from both sides, not just from the human side, but also from alternative intelligences.”

Five Easy Pieces

Heyning talks about her process of including five pieces in a prompt. They include the type of content, the description of that content, the composition, the style, and the parameters.

- Content Type: In art prompts, the type of content might be a poster or a sticker. For text it might be a letter or a research paper.

- Description: The description of the content defines your scene (a frog on a lily pond).

- Composition: The composition is the equivalent of your directions in a movie (frog gulping down a fly, or in the bright sunshine).

- Style: The style might be pointillism (or, for text, the style of comedy writing).

- Parameters: Finally, the parameter might be landscape or portrait or, for text, a word count.
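The five pieces can be thought of as fields in a small record that is flattened into one line of text. What follows is a minimal sketch in Python, not Heyning’s own tooling: the `PromptPieces` class, the `build_prompt` function, and the choice to join the pieces with commas are all assumptions made for illustration, and the example values are the frog examples from the list above.

```python
from dataclasses import dataclass


@dataclass
class PromptPieces:
    """Hypothetical container for the five pieces of a prompt."""
    content_type: str   # e.g. poster, sticker, letter, research paper
    description: str    # the scene or subject
    composition: str    # the "direction" for the scene
    style: str          # e.g. pointillism, comedy writing
    parameters: str     # e.g. landscape, portrait, a word count


def build_prompt(pieces: PromptPieces) -> str:
    """Join the non-empty pieces, in order, into one comma-separated prompt."""
    parts = [
        pieces.content_type,
        pieces.description,
        pieces.composition,
        pieces.style,
        pieces.parameters,
    ]
    return ", ".join(p.strip() for p in parts if p.strip())


frog = PromptPieces(
    content_type="poster",
    description="a frog on a lily pond",
    composition="frog gulping down a fly",
    style="pointillism",
    parameters="landscape",
)
prompt = build_prompt(frog)
print(prompt)
# poster, a frog on a lily pond, frog gulping down a fly, pointillism, landscape
```

Keeping each piece in its own field makes it easy to iterate on one element, say the style, while the rest of the prompt stays fixed.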

Providing context is also a key component. Details about the setting, characters, and mood help you get the image you had in your mind’s eye. “Negative weights” (things that should not be in your creation) can be important, too. Heyning discourages using artists’ names, especially those of living artists, in the prompt, because such derivative works raise copyright questions. She also reminds us to use commas in our prompts to make them more intelligible to the machine. “They act as separators to help the generator parse a scene.”
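One way to picture context, negative weights, and commas working together is to keep the wanted and unwanted elements in separate comma-separated strings. The sketch below is illustrative only: the `join_terms` helper and the `prompt` and `negative_prompt` keys are hypothetical names, the scene details are made up for the example, and the way a given generator actually accepts negative terms varies by tool and is not covered here.

```python
def join_terms(terms):
    """Join non-empty terms with commas so each reads as a separate element."""
    cleaned = [t.strip() for t in terms if t and t.strip()]
    return ", ".join(cleaned)


# Context: setting and mood added to the core description.
scene = join_terms(["a frog on a lily pond", "misty morning", "calm mood"])

# Things that should not appear in the result (empty entries are skipped).
avoid = join_terms(["text", "people", ""])

# Hypothetical request shape; real tools have their own fields or flags.
request = {"prompt": scene, "negative_prompt": avoid}
print(request)
```

Separating the two lists keeps the “do not include” terms from being read as part of the scene itself.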

Heyning’s quite the optimist about how humans and AI will work together, even in much-debated areas like education. “Kids are learning about art history from reading prompts created using Midjourney,” she marvels. “They are introduced to impressionism, realism and abstract art. They’re using terms like knolling (knolling is the act of arranging different objects so that they are at 90-degree angles from each other, then photographing them from above), once relegated to the realm of trained graphic designers.”

What did I learn from my crash course in prompting? The power of a good prompt is the power of parsimonious thinking: getting to the essence of what you want to create. It is similar to coding, but different, because you don’t need to learn a foreign language. This is a much more Zen-like effort, stripping away all that’s unnecessary, down to the perfect phrase. (P.S. If you prompt ChatGPT to tell you how to write the perfect prompt you’ll read even more about the Art of the Prompt.)

This story was originally posted on Techonomy.
