READ MORE: Can A Metaverse AI Win America’s Got Talent? (And What That Means For The Industry) (Bernard Marr)

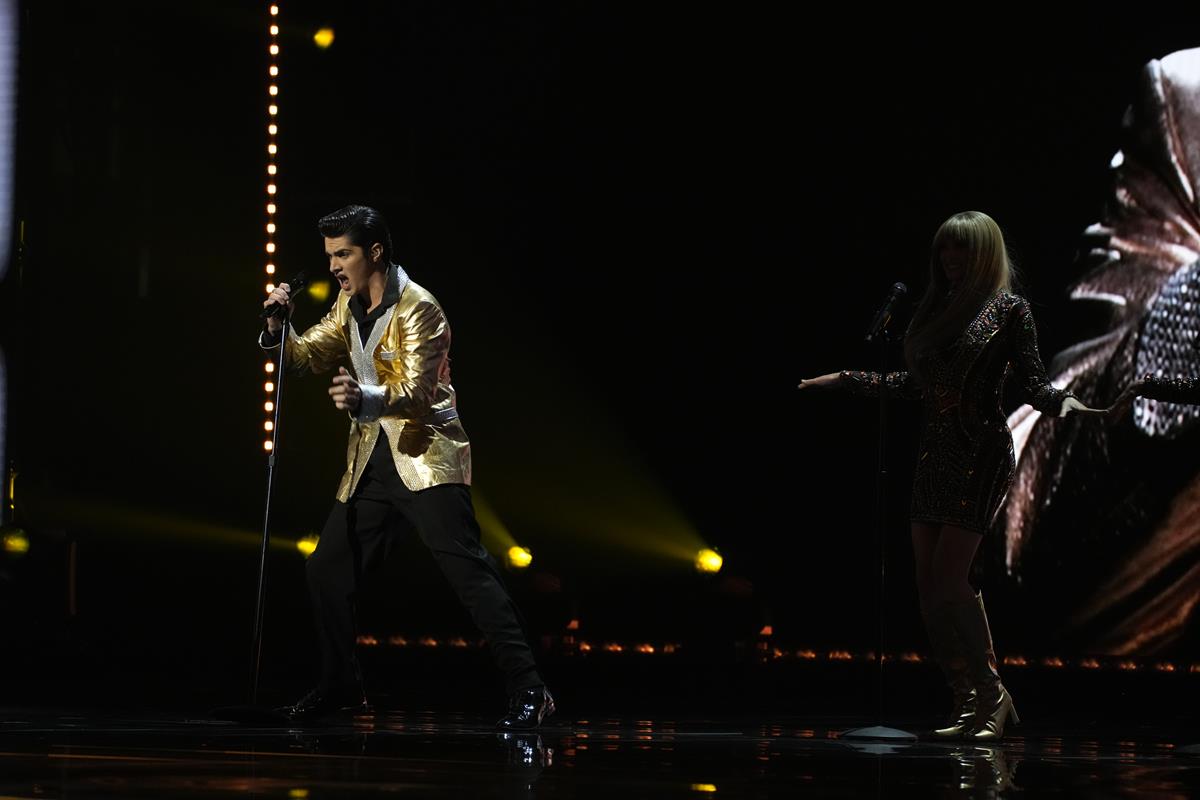

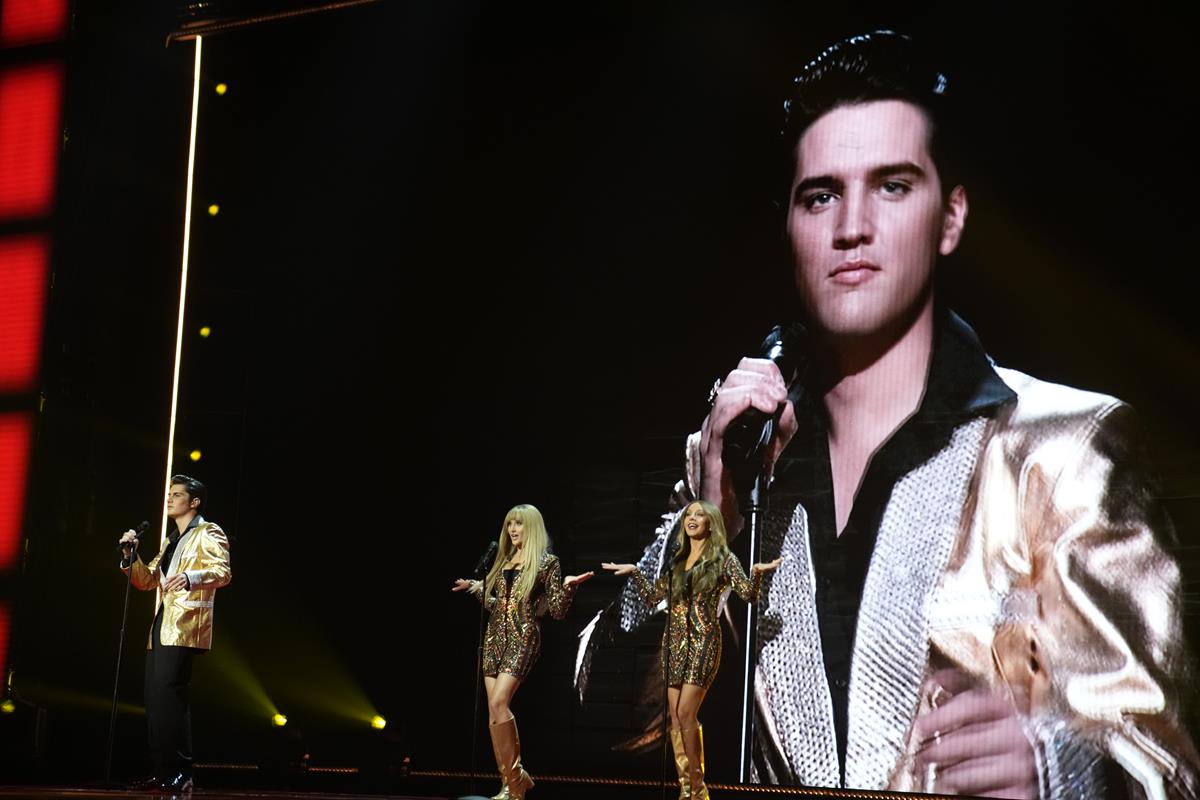

America’s Got Talent presented Elvis singing “live” in its 17th season finale, using deepfake technology developed by Metaphysic.

If you haven’t seen “Simon Cowell” performing Chicago’s “You’re the Inspiration” on the America’s Got Talent stage, then here it is:

That performance came from the audition rounds, and the judges deemed it good enough to advance to the semifinals and then the final.

In the season finale, this is what Metaphysic, the company behind the technology, did to Elvis, Cowell, and his fellow judges Heidi Klum and Sofia Vergara:

As you can see, the effect only works when cameras are placed directly in front of the actual performers' faces.

But this is live TV in front of a live audience, and that's pretty impressive.

Metaphysic founders Chris Umé and Tom Graham previously created the viral internet sensation DeepTomCruise.

They describe their work as creating synthetic media: a rapidly expanding world of digital experiences and objects generated with input from artificial intelligence.

Metaphysic says it’s pioneering “hyperreal synthetic videos.”

As the pair explained to futurist Bernard Marr, Umé spent nearly three months training an AI model for the Tom Cruise deepfakes on images and videos of the real Cruise, capturing him from multiple angles and in a variety of lighting conditions. They then shot base videos with a body double and generated the deepfakes by combining the body-double footage with actual video of Cruise's face.

The duo followed a similar process for the performance on AGT and plan to commercialize the technology, called Every Anyone, which people can use to create their own hyperreal likenesses.

“We could create hyperreal synthetic versions of regular people, including voices and faces,” Umé told Marr.

In the future, they believe, as much as 80% of the time we spend online could involve synthetic content interactions featuring regular people.

Metaphysic plans to scale synthetic media and make it more accessible to regular users for use on social media, virtual education or office work, video games, and more.

“We strongly believe that our technology is a positive force within the media landscape,” the company states. “When content suddenly becomes hyperreal, it is a lot more engaging. Furthermore, we see a future where AI can break language barriers and bring communities together.”

READ MORE: Metaphysic’s America’s Got Talent experience, with Chris Ume and Tom Graham (Metaphysic)

Some critics say that popularizing deepfakes is dangerous. They worry that bad actors can use the technology to spread lies and disinformation.

According to a report from the Massachusetts Institute of Technology, deepfakes can be “a perfect weapon for purveyors of fake news who want to influence everything from stock prices to elections.”

READ MORE: Artificial intelligence system could help counter the spread of disinformation (MIT News)

“What we hope doesn’t happen,” Graham tells Marr, “is that large corporations or bad actors use [your] data to create versions of you without your consent, your permission, or without your direct interaction. What we’re trying to create with Every Anyone is a way for individuals to own and control your data, the data that you bring into the internet and use to train content creation. The algorithm should belong to you, and you should be able to delete it.”

Metaphysic says its tool will safeguard users' private biometric face and voice data.

Graham hopes that showcasing the technology on primetime TV will raise awareness "that media can be manipulated, and maybe you should take a beat before you send that [content] on or before you see some kind of video online and you become outraged or have a particular emotional reaction."

Peacock series The Capture called into question our cast-iron belief that CCTV and surveillance cameras faithfully record crime and can be relied on to prosecute it. Time reviewer Judy Berman called it the only must-see Peacock original.

READ MORE: Peacock Is Spreading Its Wings With 9 Original Series. Here’s the One You Should Watch (Time)

The first season of the drama showed how even seemingly live video could be switched out for false recordings, with the join masked by a bus or truck crossing the frame. Editors have known the technique since Alfred Hitchcock used it to create the impression of a single-take drama in Rope (1948). The second season focused on using machine vision and AI to automate the process.

Hacking security camera feeds is also a staple of heist movies from Mission: Impossible to Ocean's Eleven, but in those films the technology is presented as state-of-the-art and as something that only threatens the integrity of a vault. In The Capture, the public at large is being duped by a state intelligence apparatus using ultra-realistic deepfakes to stage alternate realities.

Paranoia makes for a good drama, but The Capture’s premise feels all too possible.

EXPLORING ARTIFICIAL INTELLIGENCE:

With nearly half of all media and media tech companies incorporating artificial intelligence into their operations or product lines, AI and machine learning tools are rapidly transforming content creation, delivery and consumption. Find out what you need to know with these essential insights curated from the NAB Amplify archives:

- AI Is Going Hard and It’s Going to Change Everything

- Thinking About AI (While AI Is Thinking About Everything)

- If AI Ethics Are So Important, Why Aren’t We Talking About Them?

- Superhumachine: The Debate at the Center of Deep Learning

- Deepfake AI: Broadcast Applications and Implications