READ MORE: How Today’s AI Art Debate Will Shape the Creative Landscape of the 21st Century (Alberto Romero)

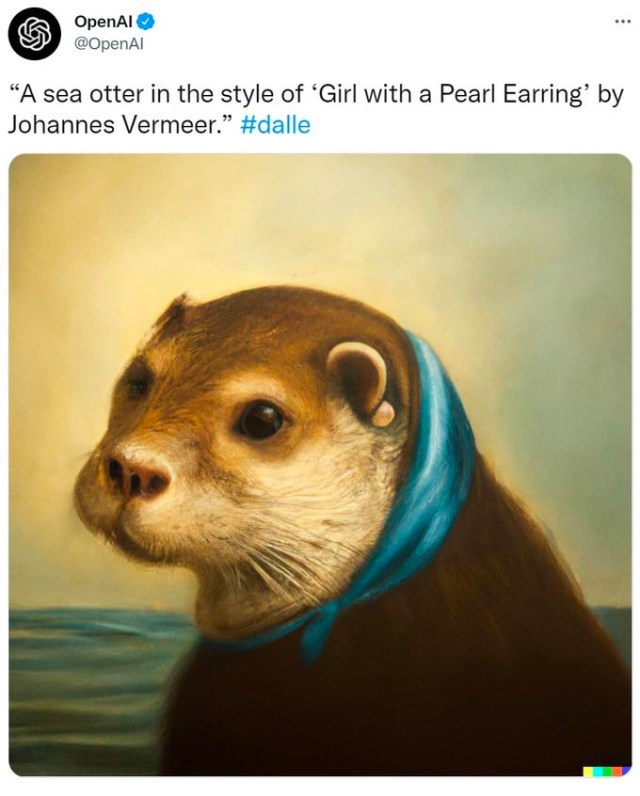

Last month OpenAI announced that it is releasing DALL-E 2 as an open beta. This means anyone using it will be able to use the generated images commercially. Midjourney and Stable Diffusion, two other comparable AI models, will allow this too. Paid members of Midjourney can sell their generations and Stable Diffusion is open source — which means that very soon, developers will have the tools to build paid apps on top of it.

“Soon, these paid services will become ubiquitous and everyone working in the visual creative space will face the decision to either learn/pay to use them or risk becoming irrelevant,” says Alberto Romero, an analyst at CambrianAI, blogging on Medium.

AI text-to-image models have risen to the fore this year. Soon anyone will have the chance to experience the emergent AI art scene. Most people will use these models recreationally to see what the fuss is about. Others plan to use them professionally or for commercial purposes, and that is where the debate starts.

“All these tech companies have one thing in common: They all take advantage of a notorious lack of regulation. They decide. And we adapt.”

— Alberto Romero

Some artists have recently been vocal about the negative impact of AI models.

OpenAI scraped the web without compensating artists to feed an AI model that would then become a service those very same artists would have to pay for, said 3D artist David OReilly in an Instagram post.

Artist Karla Ortiz argued in a Twitter thread that companies like Midjourney should give artists the option to “opt-out” from being used explicitly in prompts intended to mimic their work.

Concept artist and illustrator RJ Palmer tweeted: “What makes this AI different is that it’s explicitly trained on current working artists. [It] even tried to recreate the artist’s logo of the artist it ripped off. As an artist I am extremely concerned.”

So, do they have a point?

Romero puts this into context. In his view, all AI art models have two features that essentially differentiate them from any other previous creative tool.

First, opacity. Meaning: we don’t know precisely or reliably how AIs do what they do and we can’t look inside to find out. We don’t know how AI systems represent associations of language and images, how they remember what they’ve seen during training, or how they process the inputs to create those impressive original visual creations.

He offers examples in which the same text prompt produced different outputs from Midjourney, yet not even Midjourney can explain the exact cause and effect.

“In the case of AI art, the intention I may have when I use a particular prompt is largely lost in a sea of parameters within the model. However it transforms my words into those paintings, I can’t look inside to study or analyze. AI art models are (so far) uninterpretable tools.”

The second feature that makes AI art models different is termed “stochasticity.” This means that if you use the same prompt with the same model a thousand times, you’ll get a thousand different outputs. Again, Romero has an example of 16 red and blue cats Midjourney created using the same input. They are similar, but not the same. The differences are due to the stochasticity of the AI.
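To make the idea concrete, here is a minimal toy sketch of stochastic generation. It is not how Midjourney or any real image model works: the `toy_generate` function and its four-number "image" are hypothetical stand-ins, chosen only to show how a fixed prompt combined with fresh random noise can yield a different result on every call.

```python
import random

def toy_generate(prompt: str, rng: random.Random) -> tuple:
    """Hypothetical stand-in for an image model: returns four 'pixel' values."""
    # Deterministic part: the same prompt always yields the same base value.
    base = (sum(ord(ch) for ch in prompt) % 100) / 100
    # Stochastic part: fresh Gaussian noise is drawn on every call.
    return tuple(round(base + rng.gauss(0, 0.1), 3) for _ in range(4))

rng = random.Random()  # unseeded, so each run draws different noise
prompt = "red and blue cat"
outputs = [toy_generate(prompt, rng) for _ in range(16)]

for image in outputs[:3]:
    print(image)
print("distinct outputs:", len(set(outputs)))
```

As in Romero's 16 cats, the outputs cluster around the same prompt-derived base value but are very unlikely to match one another exactly.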

“Can I say I created the above images with the help of a tool? I don’t know how the AI helped me (opacity) and couldn’t repeat them even if I wanted to (stochasticity). It’s fairer to argue that the AI did the work and my help was barely an initial push.”

But why does it matter if AI art models are different from other tools? Because there’s a distinct lack of regulation on what the companies that own the AI can or can’t do regarding the training and deployment of these models.

OReilly is right, agrees Romero. OpenAI, Google, Meta, Midjourney, and the like have scraped the web to amass tons of data. Where does that data come from? Under which copyright does it fall? How can people license their AI-generated creations? Do the companies ask permission from the original creators? Do they compensate them? There are many questions, and the answers so far are unsatisfactory.

Operating models and goals may vary, “but all these tech companies have one thing in common: They all take advantage of a notorious lack of regulation. They decide. And we adapt,” says Romero.

This is a recipe for disaster, because companies, and by extension users and even the models themselves, can’t be judged “when the rules we’d use to judge it are non-existent,” he says.

“To the opacity and stochasticity of AI art models, we have to add the injudgeability of tech companies that own those models. This further opens the doors to plagiarism, copying, and infringement of copyright — and copyleft — laws.”

Inspiration or Copying?

This becomes a live issue if an AI trained on an artist’s work then spews out identical copies of that work for others to sell or pass off as their own.

“AI art models can reproduce existing styles with high fidelity, but does that make them plagiarizers automatically?” Romero poses.

Artists learn by studying greater artists. They reproduce and mimic other people’s work until they can grow past that and develop their personal style. Asking the AI for another artist’s style is no different.

Here, Romero doubles back on his own argument. The two features that he says make AI art models stand out from other creative tools, opacity and stochasticity, are also shared by humans.

“Human brains are also opaque — we can’t dissect one and analyze how it learns or paints — and stochastic — the number of factors that affect the result is so vast, and the lack of appropriate measuring tools so determinant, that we can consider a human brain non-deterministic at this level of analysis.”

So according to Romero’s own logic, this puts human brains in the same category as AI art models. Also, and this is key to consider, both AI art models and humans have the ability to copy, reproduce, or plagiarize. Even if not to the same degree — expert artists can reproduce styles they’re familiar with, but AI’s superior memory and computation capability make copying a style a kid’s game — both can do it.

“And that’s precisely what makes us different than AI art models. Unlike them, we’re judgeable because those ‘lines of illegality’ exist to keep us in check. Regulation for humans is mature enough to precisely define the boundaries of what we can or can’t do.”

And, therefore, before we can decide what’s inspiration and what’s plagiarism, “we first have to define impartial rules of use that take into consideration the unique characteristics of AI art models, the pace of progress of these tools, and whether or not artists want to be part of the emerging AI art scene.”

AI art models that are opaque, stochastic, very capable of copying, and injudgeable can’t be subject to current frameworks of thought, Romero concludes.

“The singular nature of AI art models and the lack of regulation is an explosive mix that makes this situation uniquely challenging.”

Should AI Art Come With a Warning Label?

READ MORE: I Don’t Want Your AI Artist (Lance Ulanoff)

Recently, Capitol Records signed rapper FN Meka, making no bones about it being a virtual TikTok star. Ten days later the record company had ditched the AI character after an outcry about racial stereotyping.

READ MORE: Capitol Records Signs Virtual Rapper FN Meka (Cybernews)

Yet what should bug us just as much is the attempt to pass off an artificial, computer-generated avatar as having genuine experience of the urban life and culture it promoted.

FN Meka is an AI-generated rapper avatar developed in 2019 by Anthony Martini and Brandon Le of Factory New, with music created using AI by the music company Vydia. The voice was real, but everything from the lyrics to the music was AI-generated.

The character has more than 500,000 monthly Spotify listeners and more than one billion views on its TikTok account, where Factory New sells NFTs and posts CG videos of FN Meka’s lifestyle, including Bugatti jets, helicopters and a Rolls-Royce custom-fitted with a hibachi grill. Its Instagram account has more than 220,000 followers.

Hours before Capitol fired the “artist,” The Guardian reports, Industry Blackout, a Black activist group fighting for equity in the music business, released a statement addressed to Capitol calling FN Meka “offensive” and “a direct insult to the Black community and our culture. An amalgamation of gross stereotypes, appropriative mannerisms that derive from Black artists, complete with slurs infused in lyrics.”

The lyrics included use of the N-word. While the AI rapper was accused of being a gross racial stereotype, it is just as pertinent that it clearly had no lived experience of what it was rapping about.

READ MORE: Capitol Records drops ‘offensive’ AI rapper FN Meka after outcry over racial stereotyping (The Guardian)

For tech commentator Lance Ulanoff, this episode highlights a fundamental flaw in the AI-as-artist experience.

“AI art is based on influences from art the machine learning has seen or been trained on from all over the internet,” he writes in a post on Medium.

“The problem is that the AI lacks the human ability to react to the art it sees and interpret it through its emotional response to the art. Because it has… no… emotions.”

Ulanoff is making a distinction between human-made art and the creations we might call art generated by increasingly sophisticated AI models like DALL-E 2.

Genuine art, he maintains, is not simply representational or an interpretation or — more reductively — a collage.

“An artist creates with a combination of skill and interpretation, the latter informs how their skill is applied. Art not only elicits emotion, but it also has it embedded within it,” he says.

By contrast, AI mimics this by borrowing paint strokes, lines, and visual styles from a million sources. But none of them — not one — is its own.

That was the flaw with FN Meka. “It could create a reasonable rap but was not informing it through its own experiences (which do not exist), but instead those it saw elsewhere. It’s the essence of a million other lives lived in a certain style and with so many computational assumptions thrown in.”

Another artistic pursuit, AI writing, is no less fraught with false humanity. Any decent AI can write a workable news story, Ulanoff points out. An article in Forbes shows that many AIs already produce articles efficiently, and that these are indistinguishable from ones written by a person.

READ MORE: Did A Robot Write This? How AI Is Impacting Journalism (Forbes)

“But these stories offer zero insight and little, if any, context,” opines Ulanoff. “How can they? You only do that through lived, not artificial or borrowed, experiences.”

The critic has titled his post “I Don’t Want Your AI Artist,” but, as he acknowledges, it is already difficult to determine at face value whether an image, a story, or a piece of music is the creation of a machine (albeit one drawing on other people’s lived experiences) or the genuine article.

Do we then need to label the creations of an AI, just as we would barcode a product in a shop, with details of its fabricator?

And if so (and I’m not delving into the philosophical or practical implications of this here), then we would need to act fast, since AI art is populating our cultural landscape as we speak.