Photoreal full-body synthetic humans, whether fictional or the digital twin of a real person, are coming to a screen near you.

The next evolution of the digital human will have a deeper relationship with us because it will not only be photoreal but also able to connect with us emotionally, said a panel of industry experts at the 2024 NAB Show in a session entitled “The Case for Digital Humans.”

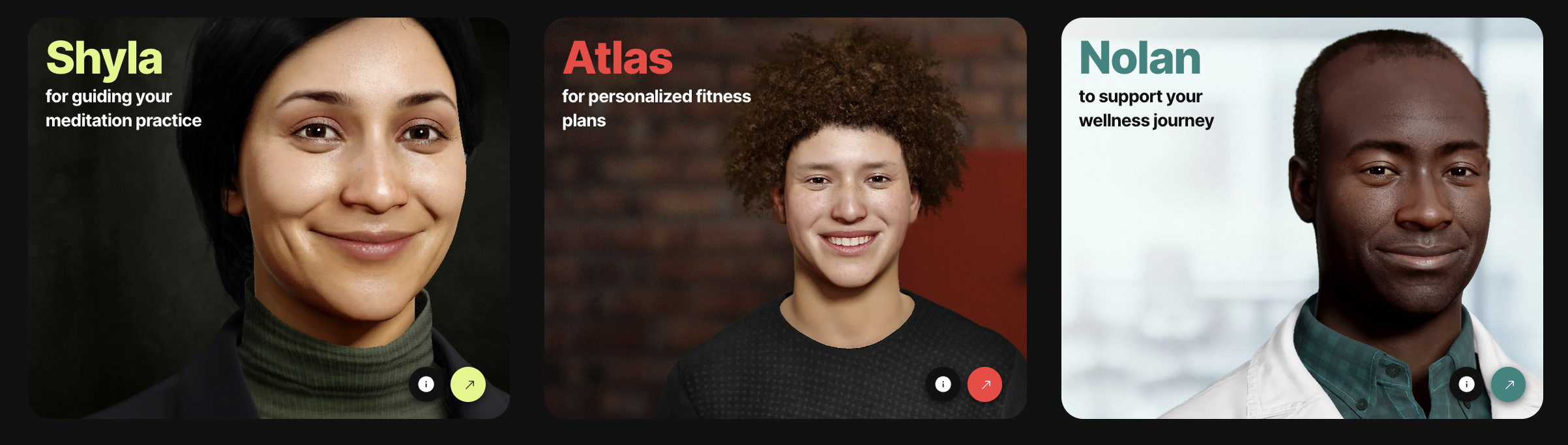

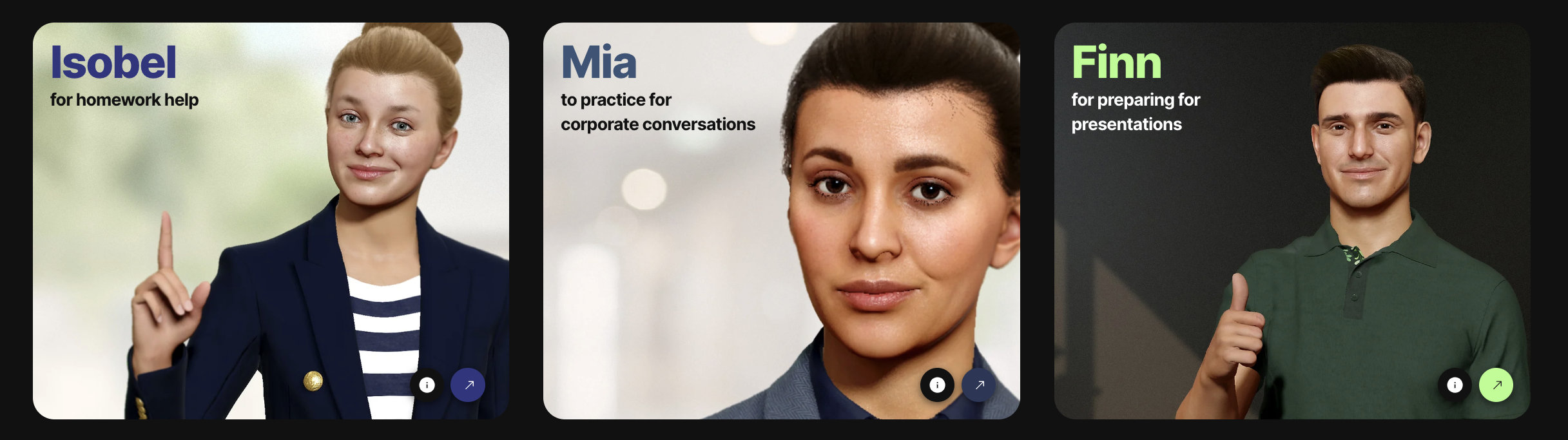

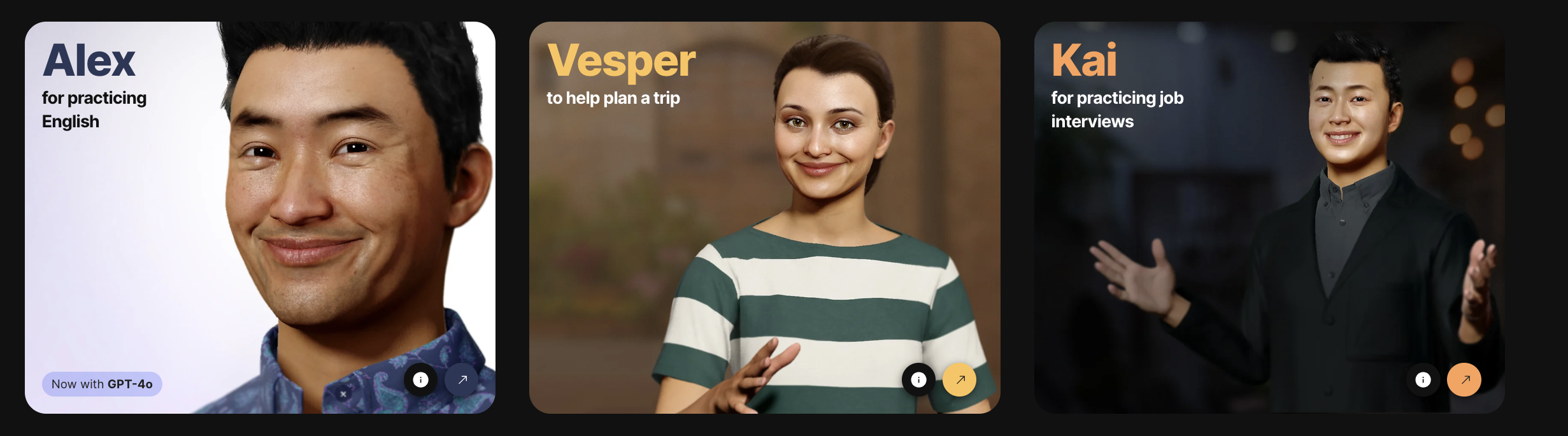

Soul Machines aims to build digital humans which deliver a more “empathetic, humanistic experience” with AI, said Fay Wells, the company’s head of partner marketing.

“We really think about them as that next layer of human interactivity,” Wells explained. “We humanize AI through embodied agents with biological AI. What we believe is that this is really a path to superhuman intelligence, and more human-like experiences with AI that sit at the intersection of both physical and digital worlds.”

Soul Machines, she said, has created a tool that “democratizes” the creation of digital humans. “With Soul Machines Studio, you can create, customize, train and deploy digital humans.”

She further explained that its technology is built on cognitive modeling.

“What happens in the human brain corresponds with the human face so when you interact with these avatars, they can see [your facial responses], process that and react accordingly.”

The company claims to be able to create digital people that can “see, hear, interact, empathize and even create memories the same way as real humans.”

Soul Machines CEO and co-founder Mark Sagar is the director of the Laboratory for Animate Technologies at the Auckland Bioengineering Institute and a two-time Academy Award winner for Avatar and King Kong. Sagar has pioneered more humanlike ways in which we relate to technology and AI by combining biologically based models of faces and neural systems to create live interactive systems.

“Effectively the technology works through physical camera and audio interface,” Wells said. “For example, if you were having a conversation with a digital human, and you smiled, they would recognize that and they would smile. If you frowned or looked upset, they would recognize that and do that.”

The tech is already in demand, she said, and use cases are growing, including celebrities seeking to make money by being in more than one place at once.

“Typically, celebs are looking for ways to extend their brand’s interaction with their fans but they want to do this with money attached to it, right. With digital humans we unlock a lot of revenue opportunities. You can use something like a subscription model to have access to one of these celebs to talk about different things [in promotional contexts].

“You can have a digital human as a brand ambassador or a customer service person. They can do that 24/7 so you’re not just confined to that nine to five space.”

Wells claimed that customer satisfaction levels, and therefore brand loyalty, increase with the use of more empathetic digital humans.

While the digital humans of Soul Machines start life as synthetic and learn to empathize, the lifelike avatars of Wild Capture are drawn from the more traditional process of performance capture.

However, Wild Capture claims its AI-infused pipeline has sped up the typically time- and resource-intensive process.

Co-founder and CEO Will Driscoll explained that Wild Capture utilizes a streamlined volumetric video solution to deliver “interoperable, lifelike digital human assets of individual talent” for use in crowd scenes with “unparalleled realism” across sectors like fashion, sports, automotive and training.

“As the spatial media landscape evolves rapidly, we’re actively exploring avenues for talent to monetize their scanned captures beyond traditional production,” Driscoll said.

His company recently worked on Francis Ford Coppola’s movie Megalopolis, delivering 200 digital assets including characters integrated throughout the film.

“We’re in the live performance to capture asset process,” he said. “We’re dealing with the authentic performance derived directly from the original performance of the person.

“We’ve automated the process and made the modification process easy for all walks of production. We’re able to build story arcs to make them interactive, but they’re not going to answer questions on the fly.”

Driscoll said this was the key difference between Wild Capture and Soul Machines. “However, if you need an unadulterated message from a politician, a pop star or an athlete, seeing their true nuances of their authentic performance, we’re able to bring it in real time to the web.”

He emphasized the company’s engagement with digital rights management and copyright, as well as with individual talent, whether living or deceased.

“By getting the talent to embrace it, rather than fearing it, you get more quality content produced,” Driscoll said. “We’ve worked with talent to help them monetize their own likeness. We catalog all the metadata, bringing that through the entire process.

“Managing that data ethically on behalf of the creative community is incredibly important, because those are our users and creators.”

He said there was likely to be increased demand for digital humans as film and gaming merge.

“We’re on that precipice where cinema and games come together to create an interactive story. We’ve automated and simplified the process of constructing characters from the footage.”
